Among the many technologies that give users data access and help them communicate over networks, Google has devised a new method of inputting commands. The method uses hand gestures as input to a wearable computing device. The gestures indicate, signify, or classify content that the user considers important or worthy of attention.

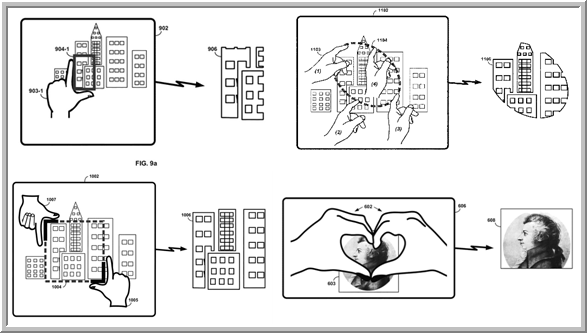

The computing device would include a head-mounted display (HMD) and a video camera to recognize the gestures and carry out the corresponding actions. The gestures could be used to select a portion of the field of view and to generate images from the selected portion. The HMD would then transmit the generated images to applications on a network server connected to the HMD.

The abstract of US8558759B1 (filed July 8, 2011; granted October 15, 2013) reads:

“In accordance with example embodiments, hand gestures can be used to provide user input to a wearable computing device, and in particular to identify, signify, or otherwise indicate what may be considered or classified as important or worthy of attention or notice. A wearable computing device, which could include a head-mounted display (HMD) and a video camera, may recognize known hand gestures and carry out particular actions in response. Particular hand gestures could be used for selecting portions of a field of view of the HMD, and generating images from the selected portions. The HMD could then transmit the generated images to one or more applications in a network server communicatively connected with the HMD, including a server or server system hosting a social networking service.”

The patent describes several gestures. In the first, the user joins two hands to form a symbolic heart; the device locates the gesture in the video data from the HMD's camera and generates an image of the area bounded by the hands. In the second, the thumb and forefinger of one hand form a right angle that moves from a first location to a second location in the field of view (FOV), defining a closed area within the FOV. In the third, the forefinger of one hand traces a closed-loop shape in the FOV.
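The right-angle gesture can be illustrated with a minimal sketch, assuming the two gesture locations are already available as pixel coordinates and treating them as opposite corners of a rectangle. The names `bounding_box` and `crop_frame`, and the list-of-rows frame representation, are hypothetical and chosen for illustration; the patent does not specify an implementation.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]  # (x, y) pixel coordinates in the camera frame


def bounding_box(first: Point, second: Point) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) for the rectangle whose opposite
    corners are the first and second locations of the gesture."""
    (x1, y1), (x2, y2) = first, second
    return min(x1, x2), min(y1, y2), max(x1, x2), max(y1, y2)


def crop_frame(frame: Sequence[Sequence[int]],
               first: Point, second: Point) -> List[List[int]]:
    """Cut the selected rectangle out of a frame stored as rows of pixels.

    Assumption: the right and bottom edges are exclusive, and the box is
    clamped to the frame so an out-of-view corner does not raise an error.
    """
    left, top, right, bottom = bounding_box(first, second)
    height = len(frame)
    width = len(frame[0]) if height else 0
    left, right = max(0, left), min(width, right)
    top, bottom = max(0, top), min(height, bottom)
    return [list(row[left:right]) for row in frame[top:bottom]]


# A tiny 4x5 "frame" whose pixel value encodes its row and column.
frame = [[r * 10 + c for c in range(5)] for r in range(4)]
selected = crop_frame(frame, first=(3, 2), second=(1, 0))
```

The order of the two corners does not matter, so the gesture may be dragged in any direction across the FOV.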

The shapes identified within the closed area of the FOV would act as instructions, according to predetermined programming, and the device would execute them to generate results. The gestures would thus serve as a manual input mechanism for Google Glass and trigger its settings.
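One way to picture gestures acting as predetermined instructions is a lookup table from a recognized shape to an action. The following is only a sketch under stated assumptions: the shape labels, the handler functions, and the pairing of each gesture with an action are hypothetical, not taken from the patent.

```python
from typing import Callable, Dict, Optional

# Hypothetical actions; each receives a description of the selected region.
def share_image(region: str) -> str:
    return f"shared image of {region}"


def capture_image(region: str) -> str:
    return f"captured image of {region}"


def search_image(region: str) -> str:
    return f"searched for content in {region}"


# Assumed mapping from recognized gesture shape to programmed action.
ACTIONS: Dict[str, Callable[[str], str]] = {
    "heart": share_image,
    "right_angle": capture_image,
    "closed_loop": search_image,
}


def execute_gesture(shape: str, region: str) -> Optional[str]:
    """Run the action programmed for a shape, or do nothing if unknown."""
    handler = ACTIONS.get(shape)
    if handler is None:
        return None  # unrecognized gestures are ignored
    return handler(region)


result = execute_gesture("heart", "area between the hands")
```

Keeping the mapping in a table means a new gesture can be supported by adding one entry, without changing the dispatch logic.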